This setup will utilize 5 servers (you can get away with 4, but do your research on running a 2-node MariaDB cluster first). I'm using 3 freshly minted VMs running Debian 7 (Wheezy) for the cluster nodes.

Cluster Node 1

hostname mariadb-00

IP address 10.0.10.10

Cluster Node 2

hostname mariadb-01

IP address 10.0.10.11

Cluster Node 3

hostname mariadb-02

IP address 10.0.10.12

Step 1 - Setting up MariaDB Servers

Adding the MariaDB repository and installing the prerequisites:

# add-apt-repository is provided by python-software-properties, so install that first

apt-get install python-software-properties rsync

apt-key adv --recv-keys --keyserver keyserver.ubuntu.com 0xcbcb082a1bb943db

add-apt-repository 'deb http://mirror.aarnet.edu.au/pub/MariaDB/repo/10.0/debian wheezy main'

apt-get update

apt-get install mariadb-galera-server galera

Step 2 - Setting up MariaDB security

The default installation is not very secure, so we can improve on that. Start the mysqld service (yes, MariaDB is still called mysql) on all nodes.

Next we need to run the mysql_secure_installation script so we can improve the security.

/usr/bin/mysql_secure_installation

Go through the script and note down your new root password if you changed it.

Step 3 – Set up MariaDB Galera Cluster users

Now we need to set up some users that must be able to access the database. The ‘sst_user’ is the user which a database node will use for authenticating to another database node in the State Snapshot Transfer (SST) phase. Execute the following commands on all nodes:

mysql -u root -p

mysql> DELETE FROM mysql.user WHERE user='';

mysql> GRANT ALL ON *.* TO 'root'@'%' IDENTIFIED BY 'dbpass';

mysql> GRANT USAGE ON *.* to sst_user@'%' IDENTIFIED BY 'dbpass';

mysql> GRANT ALL PRIVILEGES on *.* to sst_user@'%';

mysql> FLUSH PRIVILEGES;

mysql> quit
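The grants above use '%' as the host. As a rough sketch of a tighter variant, the same statement could be generated once per node address instead. The helper name render_sst_grants is made up for this example, and the addresses in the usage note are the three cluster nodes listed at the top. The function only prints SQL; it does not run anything.

```shell
#!/bin/sh
# Hypothetical helper (not part of MariaDB): print one GRANT per allowed host.
# Usage: render_sst_grants USER PASSWORD HOST [HOST...]
# Assumption: the password contains no single quotes (no SQL escaping is done).
render_sst_grants() {
    user=$1
    password=$2
    shift 2
    for host in "$@"; do
        printf "GRANT ALL PRIVILEGES ON *.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$user" "$host" "$password"
    done
    printf 'FLUSH PRIVILEGES;\n'
}
```

One way to use it would be `render_sst_grants sst_user dbpass 10.0.10.10 10.0.10.11 10.0.10.12 | mysql -u root -p`, after reading the printed statements over first.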

Please note that the ‘%’ means that root or sst_user is allowed to access the database from any host. For production, make it more secure by specifying the hostname(s) or IP addresses from which those users can access the database.

Once the software has been installed, we can create the Galera configuration file: /etc/mysql/conf.d/cluster.cnf

[mysqld]

query_cache_size=0

binlog_format=ROW

default-storage-engine=innodb

innodb_autoinc_lock_mode=2

query_cache_type=0

bind-address=0.0.0.0

# Galera Provider Configuration

wsrep_provider=/usr/lib/galera/libgalera_smm.so

#wsrep_provider_options="gcache.size=32G"

# Galera Cluster Configuration

wsrep_cluster_name="CLUSTERNAME"

wsrep_cluster_address="gcomm://IPOFNODE,IPOFOTHERNODE"

# Galera Synchronization Configuration

wsrep_sst_method=rsync

#wsrep_sst_auth=user:pass

# Galera Node Configuration

wsrep_node_address="CURRENTNODEIP"

wsrep_node_name="CURRENTNODE"

Change the following lines to suit your configuration:

wsrep_cluster_name="CLUSTERNAME"

wsrep_cluster_address="gcomm://IPOFNODE,IPOFOTHERNODE"

wsrep_node_address="CURRENTNODEIP"

wsrep_node_name="CURRENTNODE"
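Since only these four lines differ from node to node, they could be produced by a small helper rather than edited by hand. This is just a sketch: the function name render_galera_node_cnf and its argument order are invented here, and it prints the lines to stdout so the result can be checked before it goes into cluster.cnf.

```shell
#!/bin/sh
# Hypothetical helper: print the per-node Galera settings for one node.
# Usage: render_galera_node_cnf CLUSTER_NAME NODE_NAME NODE_IP PEER_IPS
#   PEER_IPS is a comma-separated list of all cluster node addresses.
render_galera_node_cnf() {
    cluster_name=$1
    node_name=$2
    node_ip=$3
    peer_ips=$4
    cat <<EOF
wsrep_cluster_name="${cluster_name}"
wsrep_cluster_address="gcomm://${peer_ips}"
wsrep_node_address="${node_ip}"
wsrep_node_name="${node_name}"
EOF
}
```

For the first node in this setup, a call might look like `render_galera_node_cnf CLUSTERNAME mariadb-00 10.0.10.10 10.0.10.10,10.0.10.11,10.0.10.12`.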

Notice that we need to set the IP addresses in wsrep_cluster_address to match the addresses of our cluster nodes; then, on each node, we use the local IP address and hostname in the last two lines. Complete all of the above steps on every node, and then stop the mysql service on every node:

service mysql stop

Now we need to copy the contents of /etc/mysql/debian.cnf from node 1 to the other nodes (there are some passwords in that file that need to match across all nodes once we’ve got them clustered). Once that’s complete, we can create the cluster. On the first node, we start the mysql service with a special argument to create the new cluster:

service mysql start --wsrep-new-cluster

And on the remaining nodes, we start the usual way:

service mysql start
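Once every node has been started, it is worth confirming that they actually joined the same cluster. Galera exposes the member count in the wsrep_cluster_size status variable; the sketch below wraps the comparison in a made-up helper, cluster_size_is, which reads the mysql client's tab-separated batch output on stdin.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the wsrep_cluster_size value read from
# stdin equals the expected node count.
# Expected input format (mysql batch output): "wsrep_cluster_size<TAB>3"
cluster_size_is() {
    expected=$1
    # Pick the value column of the wsrep_cluster_size row; empty if absent.
    actual=$(awk '$1 == "wsrep_cluster_size" { print $2 }')
    [ "$actual" = "$expected" ]
}
```

A possible invocation on any node, assuming three nodes: `mysql -u root -p -N -B -e "SHOW STATUS LIKE 'wsrep_cluster_size'" | cluster_size_is 3 && echo "cluster complete"`.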

We will need to allow our HAProxy nodes access to the MariaDB servers for health checks. On one of the MySQL nodes, log on to MySQL as root and enter the following:

mysql -u root -p

grant all on *.* to root@'%' identified by 'password' with grant option;

insert into mysql.user (Host,User) values ('192.168.1.30','haproxy');

insert into mysql.user (Host,User) values ('192.168.1.31','haproxy');

flush privileges;

exit

Notice that I specified the IP addresses of my HAProxy nodes, and I used the username ‘haproxy’. We don’t set a password for the haproxy user, as it is restricted to your HAProxy hosts only.

Setting up HAProxy and keepalived

For this configuration, I’ve created two Ubuntu 14.04 servers (mine are virtual servers with two virtual CPUs and 1 GB of RAM). You’d probably want to make these bigger in a production environment, depending on the number of concurrent connections you expect. I’ve given them hostnames and IP addresses:

haproxy1 (192.168.1.30)

haproxy2 (192.168.1.31)

We’ll also need to allocate a third IP address to use as the virtual IP address (VIP). We’ll use 192.168.1.32. This will ultimately be the endpoint used to access the OpenStack services that we’ll build later.

The first thing we need to do is to let the kernel know that we intend to bind additional IP addresses that won’t be defined in the interfaces file. To do that we edit /etc/sysctl.conf and add the following line:

net.ipv4.ip_nonlocal_bind=1
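Adding that line by hand is fine for two hosts. If the step is scripted, it helps to make it safe to run twice, so the setting is not appended again on every run. A minimal sketch, where ensure_sysctl_line is a name invented for this example:

```shell
#!/bin/sh
# Hypothetical helper: append "key=value" to a sysctl-style file unless an
# uncommented line for that exact key is already present.
# Usage: ensure_sysctl_line FILE KEY VALUE
ensure_sysctl_line() {
    file=$1
    key=$2
    value=$3
    # Compare the text left of the first "=" (spaces and tabs removed) to the key.
    if awk -F= -v k="$key" '
        { name = $1; gsub(/[ \t]/, "", name); if (name == k) found = 1 }
        END { exit found ? 0 : 1 }
    ' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s=%s\n' "$key" "$value" >> "$file"
}
```

On each HAProxy host this might be called as `ensure_sysctl_line /etc/sysctl.conf net.ipv4.ip_nonlocal_bind 1`. Note that it leaves an existing line untouched even if that line carries a different value.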

Then we run the following command to make this take effect without rebooting:

sysctl -p

To install HAProxy on Debian Wheezy, you have to go through backports. First add backports in /etc/apt/sources.list:

deb http://ftp.debian.org/debian/ wheezy-backports main

Then install haproxy:

apt-get update

apt-get install haproxy keepalived mysql-client

Next, we define the keepalived configuration by creating the following file: /etc/keepalived/keepalived.conf

global_defs {

router_id haproxy1

}

vrrp_script haproxy {

script "killall -0 haproxy"

interval 2

weight 2

}

vrrp_instance 50 {

virtual_router_id 50

advert_int 1

priority 101

state MASTER

interface eth0

virtual_ipaddress {

192.168.1.32 dev eth0

}

track_script {

haproxy

}

}
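The same file is needed on both HAProxy hosts with only a few values changed, so it could be rendered from parameters. The sketch below reproduces the configuration above; render_keepalived_conf is a made-up name. Giving the second node `state BACKUP` and a lower priority, as in the usage note, is a common keepalived convention and an assumption here, not something the configuration above prescribes.

```shell
#!/bin/sh
# Hypothetical helper: print a keepalived.conf matching the one shown above.
# Usage: render_keepalived_conf ROUTER_ID STATE PRIORITY VIP INTERFACE
render_keepalived_conf() {
    router_id=$1
    state=$2
    priority=$3
    vip=$4
    iface=$5
    cat <<EOF
global_defs {
    router_id ${router_id}
}

vrrp_script haproxy {
    script "killall -0 haproxy"
    interval 2
    weight 2
}

vrrp_instance 50 {
    virtual_router_id 50
    advert_int 1
    priority ${priority}
    state ${state}
    interface ${iface}
    virtual_ipaddress {
        ${vip} dev ${iface}
    }
    track_script {
        haproxy
    }
}
EOF
}
```

For example, `render_keepalived_conf haproxy1 MASTER 101 192.168.1.32 eth0` prints the file for the first node, and `render_keepalived_conf haproxy2 BACKUP 100 192.168.1.32 eth0` would be one plausible variant for the second.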

Notice there are a few specific items that we need to set for this. I’ve set the router_id to be the hostname, and I’ve specified the VIP as 192.168.1.32. When you create this file on the second node, make sure to use the hostname of the second node.

Next, we will define the HAProxy configuration: /etc/haproxy/haproxy.cfg

global

log /dev/log local0

chroot /var/lib/haproxy

stats socket /run/haproxy/admin.sock mode 660 level admin

user haproxy

group haproxy

daemon

defaults

log global

option dontlognull

contimeout 5000

clitimeout 50000

srvtimeout 50000

errorfile 400 /etc/haproxy/errors/400.http

errorfile 403 /etc/haproxy/errors/403.http

errorfile 408 /etc/haproxy/errors/408.http

errorfile 500 /etc/haproxy/errors/500.http

errorfile 502 /etc/haproxy/errors/502.http

errorfile 503 /etc/haproxy/errors/503.http

errorfile 504 /etc/haproxy/errors/504.http

listen stats 192.168.1.30:80

mode http

option httplog

stats enable

stats uri /stats

stats realm HAProxy\ Statistics

stats auth admin:password

listen galera 103.29.172.121:3306

balance source

mode tcp

option tcpka

option mysql-check user haproxy

server em-mariadb-00 103.29.172.124:3306 check weight 1

server em-mariadb-01 103.29.172.125:3306 check weight 1
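The server lines at the end of the galera listener follow one pattern per database node, so adding a node means adding one more line of the same shape. As an illustration only, a hypothetical helper render_galera_servers could print them from "name ip" pairs on stdin:

```shell
#!/bin/sh
# Hypothetical helper: turn "name ip" pairs (one per line on stdin) into
# HAProxy server lines in the same form as the galera listener above.
# Usage: render_galera_servers [PORT]   (PORT defaults to 3306)
render_galera_servers() {
    port=${1:-3306}
    while read -r name ip; do
        # Skip blank lines in the input.
        [ -n "$name" ] || continue
        printf 'server %s %s:%s check weight 1\n' "$name" "$ip" "$port"
    done
}
```

Feeding it `em-mariadb-00 103.29.172.124` and `em-mariadb-01 103.29.172.125` reproduces the two lines above. After editing the real file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the configuration without starting the proxy.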

Notice that I’ve used the local IP address in the stats listener. When you set up the second node, make sure to use its IP address. Also notice the username and password in the stats auth line. Set this to whatever you want. Then, you will be able to access the stats page via your browser.

Now we need to enable HAProxy. To do this, edit the file /etc/default/haproxy and change ENABLED from 0 to 1:

# Set ENABLED to 1 if you want the init script to start haproxy.

ENABLED=1

# Add extra flags here.

#EXTRAOPTS="-de -m 16"

Now we can restart the services:

service keepalived restart

service haproxy restart

Auto start after reboot

Edit the defaults file: /etc/default/haproxy

# Set ENABLED to 1 if you want the init script to start haproxy.

ENABLED=1

Edit the init script: /etc/init.d/haproxy

HAPROXY=/usr/sbin/haproxy

EXTRAOPTS=

ENABLED=1

So the above covers the power generation and power storage. The MPPT controller will output 12V (for my initial testing) but can be switched to 24V if I need to.

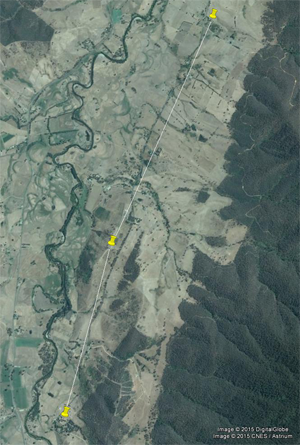

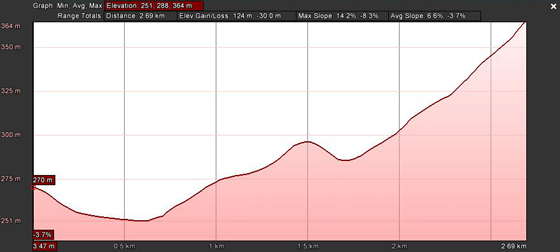

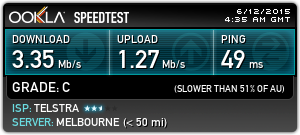


Having a little bit of knowledge about "the internet", I decided to roll my own wifi link. I initially looked at trying to connect back to the exchange that my phone line currently terminates at. Upon learning that it is only ADSL1 capable, I ruled that out as an option.